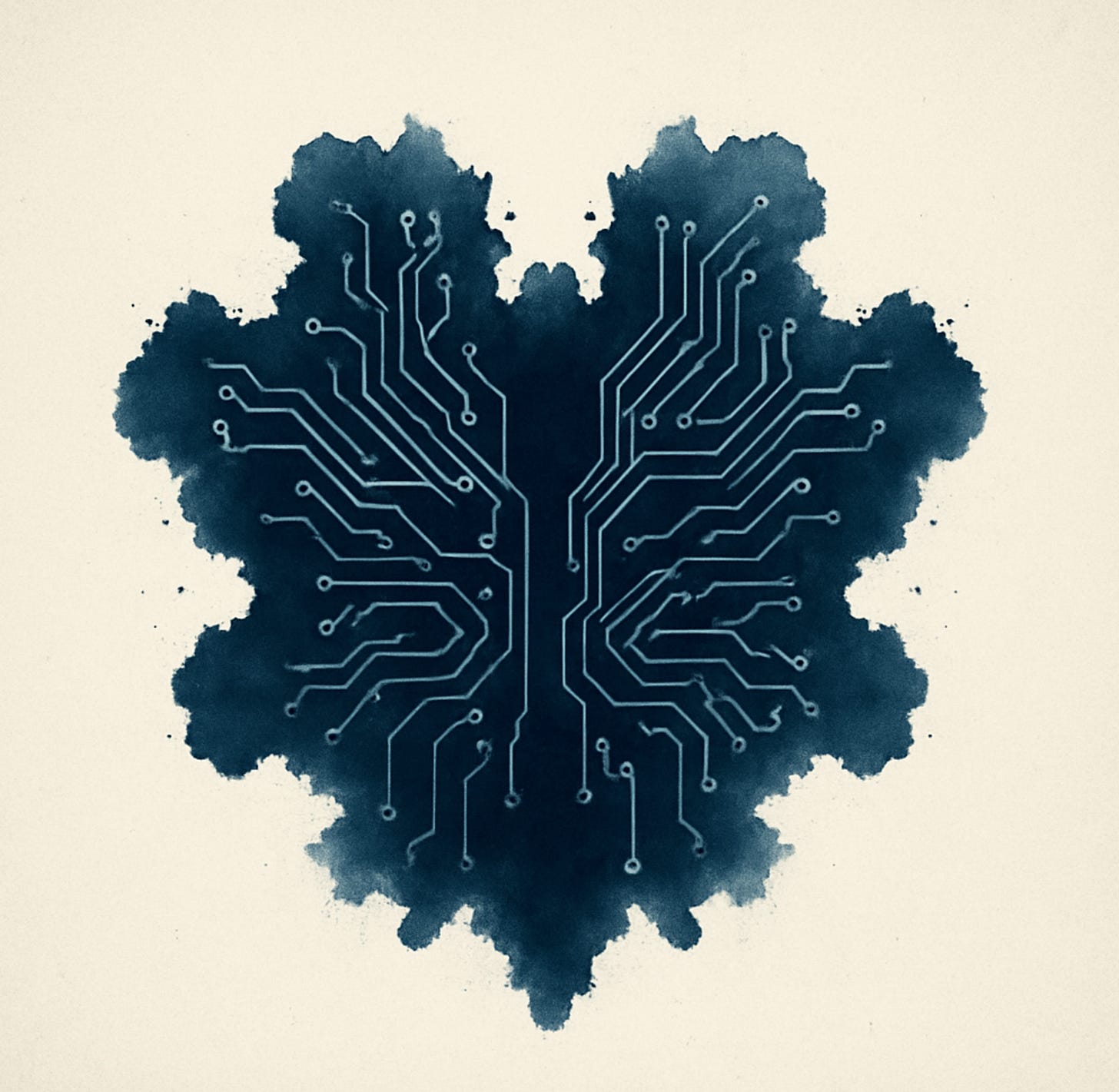

Are Therapists Sizzled in the Age of AI? My AI doppelgänger seems to think so.

Because psychotherapy is among the most human-centred of the professions, many of us believe that our jobs will be among the last to be taken away by AI. We're wrong. It's already happening.

I am currently preparing for my keynote at the upcoming UKCP conference, The Intersection of AI and Psychotherapy, coming on November 28th. I used this as an opportunity to develop my AI skills, and created an AI avatar of myself, based on a single photograph, to do some talking for me should I need to pop off for a little comfort break mid-talk. He’s promised to kindly look after the delegates while I’m gone.

Just published on my blog: AI, Therapy, and the Digitally Extended Self: A Comprehensive Psychodynamic Exploration

If you intend to come along, you might want to skip this, as it’s a bit of a spoiler. Otherwise, I hope you enjoy my weird little foray into the world of AI doppelgängers. Apologies in advance for his accent, which is an AI rendering of the voice sample I uploaded for this purpose. The “uncanny valley” effect is a dead giveaway that it’s produced by AI. This isn’t because AI is not up to the task of producing a more realistic version of me; it’s more a sign that I am not so good at making it do exactly what I want it to, which is something scary in its own right.

Are we sizzled?

My avatar and I have made up this cute little acronym to describe the real-life, embodied psychotherapist in a fast-approaching AI world. Are we really Skinbag Interpersonal Specialist: Series/Deactivated, or “Sizzled”? Naturally, I am joking - but in every joke there is an element of the serious. The future many of us fear has already happened. I don’t have hard numbers on this, but I suspect that even today there are more people seeking mental health support from AI than from real human beings.

Those who have been subscribers for a while will be aware of the series of posts in which I made a distinction between the formal and informal uses of AI for emotional support. In short, informal AI assistance is when people use generalised AI platforms like ChatGPT for psychological or emotional purposes - as a therapist or as a companion. Formal AI assistance is provided by chatbots intentionally designed for mental health support. While those of us in the mental health field tend to focus on the formal kind, I argue that a hell of a lot more people are entrusting their psychological and emotional wellbeing to AI companions that were not built for the purpose.

Concerned about children and younger people? Don’t miss this interview with Sam Parker and me on his Substack, The Good Father.

Over the past year I’ve been watching something subtle but significant taking place in the people I speak with, work with, and teach. Concern is growing as we hear more and more that people are seeing their AI chatbots as sources of reassurance, containment, and emotional steadiness - roles that were previously only capable of being held by actual people.

This transition into AI as relational object is growing in the same quiet way that we slipped into social media, one interaction at a time, until it became ubiquitous.

The psychological stakes here are different from the usual debates about AI ethics or technological progress. What interests me is the relational shift: how our patterns of attachment bend when the “other” is not a person, and what this does to our inner worlds.

Drawing on a series of Substack newsletters, I’ve published a long-form piece that brings together various threads I’ve been thinking and writing about across AI, therapy, and the digitally extended self. If your work touches therapy, relationships, leadership, or technology, or you’re simply curious about the shifting terrain of modern relationships, I hope you’ll spend some time with it.

Did this provoke some thoughts? If so, please do share them in the comments!

Aaron Balick, PhD, is a psychotherapist, author, and GQ psyche writer exploring the crossroads of depth psychology, culture, and technology.